If you know me, you know that we take a lot of pictures. You’ll also likely have heard me say something along the lines of “There will be a whole generation of kids who grow up and, as adults, have no photos of themselves when they were young.” Or something like “There are only two kinds of photos online: the ones that never go away (that kegstand photo that shows up at your job interview) and the ones that disappear forever (your baby’s first birthday pictures).” I really do believe this to be true. Think about all the people you know whose only pictures of their kids are on their cell phones. When the phone is lost, so are the pictures. Or the photos are stored on a single hard drive that crashes. Or they never get off the camera’s memory card. At least with film you could always find that shoebox in the attic full of your childhood photos.

So, as part of my paranoia about losing our photos, we take a few precautions. First, we get the pictures off the memory cards and onto the computer as soon as we can. Then we back up the whole computer, photos included, using Carbonite. I’ve talked about it before. That gets the photos off site in case the computer is stolen or the hard drive crashes. Next, we upload all of the photos to Flickr, which acts both as a backup system and, of course, as a way to share our photos. When we upload to Flickr we also add tags and descriptions.

It occurred to me a couple of weeks ago that all this additional data, the metadata of tags and descriptions, is almost as important as the pictures themselves. With all that metadata we can find photos that would be impossible to find without it. We can search for photos of Wesley and Jillian swimming and have them all in just a few minutes. Want only the ones that also have Amber? No problem. But what if Flickr goes away? They might shut down or kick us off for no reason at all. It happens with web services all the time. Then we’d still have copies of the pictures on our computer and backed up off site, but I’d have no way to find a specific picture, and we’d have no idea what was happening in those pictures.

I decided we needed a local copy of the metadata as well. Luckily, Flickr has an awesome API that lets you work programmatically with all your images and data. I decided to use Python to do the heavy lifting and Beej’s Python Flickr API kit to interface with Flickr. I wrote my script (download at the bottom of this page) using ActiveState Python version 2.6.1 on Windows Vista, but it should work on a wide variety of systems.

The program downloads the most critical information about each photo, but not the photo itself. Many variables are set near the top of the script, and the user can edit them easily and safely. These control things like the name of the database file (it uses SQLite for persistent storage) and the range of upload dates for which photo information is downloaded. The script also handles exporting the data to a CSV file (easily loaded into an Excel spreadsheet) and a few database management tasks.
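For readers curious what the SQLite side of a tool like this might look like, here is a minimal sketch. It is written in Python 3 rather than the 2.6 the script targets, and it is not the script’s actual code. The setting names (`DB_FILE`, `MIN_UPLOAD_DATE`, `MAX_UPLOAD_DATE`), the table layout, and the helper functions are all hypothetical. The sketch stores one row per photo and one row per photo/tag pair. It also converts the date range to Unix timestamps, which Flickr documents as an accepted form for its `min_upload_date` and `max_upload_date` search arguments.

```python
import sqlite3
from datetime import datetime, timezone

# Hypothetical user-editable settings, in the spirit of the variables
# the script keeps near its top. These names are illustrative only.
DB_FILE = "flickr_metadata.db"
MIN_UPLOAD_DATE = datetime(2008, 1, 1, tzinfo=timezone.utc)
MAX_UPLOAD_DATE = datetime(2009, 1, 1, tzinfo=timezone.utc)

# One row per photo, plus one row per (photo, tag) pair so that tag
# searches become ordinary SQL joins.
SCHEMA = """
CREATE TABLE IF NOT EXISTS photos (
    photo_id    TEXT PRIMARY KEY,  -- unique ID assigned by Flickr
    format      TEXT,              -- jpg, gif, etc.
    description TEXT,
    date_upload INTEGER,           -- Unix timestamp
    date_taken  TEXT,
    url         TEXT
);
CREATE TABLE IF NOT EXISTS tags (
    photo_id TEXT REFERENCES photos(photo_id),
    tag      TEXT
);
"""


def open_db(path=DB_FILE):
    """Open the SQLite file (creating it if needed) and ensure the tables exist."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def upload_date_range():
    """Return (min, max) upload dates as integer Unix timestamps."""
    return int(MIN_UPLOAD_DATE.timestamp()), int(MAX_UPLOAD_DATE.timestamp())
```

Because the tables are created with `IF NOT EXISTS`, calling `open_db()` on every run is harmless. An existing database is simply reopened.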

Prerequisites: You’ll need these in place to begin (and they are all free!)

- Python – I use ActiveState Python for Windows. It is free and easy to install

- Python Flickr API kit – instructions for install and other documentation are here.

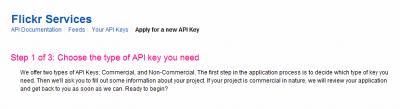

- A Flickr API Key – request one from Flickr – the non-commercial is what you want

- Your Flickr User ID – you can find that here, it is at the top of the right hand column

What it can do:

- Download the photo ID (Flickr’s unique ID for each uploaded item), the file format (jpg, gif, etc.), tags, descriptions, upload and taken dates, and the URL for the photo on Flickr. Right now it only downloads photo information, not other media.

- Export the information to a CSV file you can open with Excel and use however you like

- Handle basic database management like removing duplicates and compacting the database

How you use it:

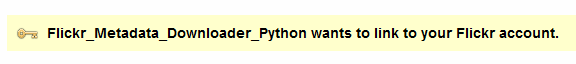

- Once you have your Flickr API key, insert the information Flickr gives you, along with your User ID, into the script near the top of the Variables section. This has to be set up before you can download anything. The first time you run the script you will be asked to authorize the application on your account; a browser window will launch automatically and take you to Flickr.

- Edit any other settings you want to change (the date range, perhaps) and then run the script, either from the command line or by double-clicking its icon. It will create your database (if it doesn’t already exist), query Flickr for photo IDs, check which of them are already in your database, and download the data for the new ones. In my experience I can download information on about 10,000 photos before my connection to Flickr fails. Sometimes it is more; sometimes I only get a few thousand. Luckily, because the program writes the information to the database as it downloads, nothing is lost and you don’t have to start fresh. Just launch the program again and it will pick up where it left off. It took me four or five runs to get everything about all 36,000 photos we have online.
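The pick-up-where-it-left-off behaviour described above can be sketched roughly as follows. This is an assumption about how such a loop could work, not the script’s own code. `save_new_photos` is hypothetical, and so is `fetch_info`, which stands in for whatever per-photo Flickr request the real script makes. The schema is a simplified stand-in.

The idea is to check each ID against the database before asking Flickr for anything. The loop then commits after every photo, so a dropped connection costs at most the photo being fetched at the time.

```python
import sqlite3

# Simplified, hypothetical schema: one row per photo, one row per tag.
SCHEMA = """
CREATE TABLE IF NOT EXISTS photos (
    photo_id TEXT PRIMARY KEY, format TEXT, description TEXT,
    date_upload INTEGER, date_taken TEXT, url TEXT);
CREATE TABLE IF NOT EXISTS tags (photo_id TEXT, tag TEXT);
"""


def open_db(path):
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def save_new_photos(conn, photo_ids, fetch_info):
    """Download and store data for IDs not already in the database.

    photo_ids:  IDs returned by a Flickr listing call.
    fetch_info: hypothetical callable, photo_id -> dict with keys
                format, description, date_upload, date_taken, url, tags.
    Returns the number of photos added on this run.
    """
    existing = {row[0] for row in conn.execute("SELECT photo_id FROM photos")}
    added = 0
    for photo_id in photo_ids:
        if photo_id in existing:
            continue  # saved on an earlier run, so skip the Flickr request
        info = fetch_info(photo_id)  # may raise if the connection drops
        conn.execute(
            "INSERT INTO photos VALUES (?, ?, ?, ?, ?, ?)",
            (photo_id, info["format"], info["description"],
             info["date_upload"], info["date_taken"], info["url"]),
        )
        conn.executemany(
            "INSERT INTO tags VALUES (?, ?)",
            [(photo_id, tag) for tag in info["tags"]],
        )
        conn.commit()  # this photo is now safe even if the next request fails
        existing.add(photo_id)
        added += 1
    return added
```

Committing once per photo is slower than batching many inserts into one transaction. The benefit is that rerunning after a failure repeats no completed work.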

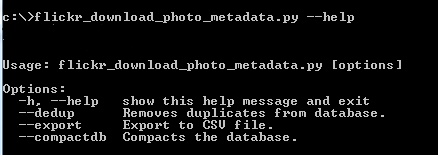

- To export the data to a CSV file, run the application from the command line with the “--export” flag.

- To shrink the database, use the “--compactdb” flag at the command line. You only need to do this if data has been removed.

- To remove any duplicates, use the “--dedup” flag at the command line. You shouldn’t have duplicates, since they should be skipped during download, but just in case.

- You can use the “--help” command line flag to pull up brief help.
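Here is a rough sketch of how these maintenance flags could be wired up with Python 3’s argparse module. The flag names match the ones above, and argparse generates `--help` automatically. Everything else is an assumption, including `--db`, the default file names, the function names, and the table layout: a `photos` table keyed on photo_id plus a `tags(photo_id, tag)` table. Because photo_id is a primary key here, only tag rows can end up duplicated. Unlike the csv module in Python 2.6, Python 3’s csv module writes Unicode text directly, so opening the output file as UTF-8 avoids the character problem mentioned in the wishlist below.

```python
import argparse
import csv
import sqlite3


def export_csv(conn, path):
    """Write one CSV row per photo, with its tags joined by spaces."""
    rows = conn.execute(
        """SELECT p.photo_id, p.format, p.description, p.date_upload,
                  p.date_taken, p.url, GROUP_CONCAT(t.tag, ' ')
           FROM photos p LEFT JOIN tags t ON t.photo_id = p.photo_id
           GROUP BY p.photo_id ORDER BY p.photo_id"""
    )
    # utf-8-sig writes a byte-order mark, which helps Excel detect UTF-8.
    with open(path, "w", newline="", encoding="utf-8-sig") as f:
        writer = csv.writer(f)
        writer.writerow(["photo_id", "format", "description",
                         "date_upload", "date_taken", "url", "tags"])
        writer.writerows(rows)


def dedup(conn):
    """Keep the first copy of each (photo_id, tag) pair and delete the rest."""
    conn.execute(
        """DELETE FROM tags WHERE rowid NOT IN (
               SELECT MIN(rowid) FROM tags GROUP BY photo_id, tag)"""
    )
    conn.commit()


def compact_db(conn):
    """Reclaim space left by deleted rows.

    VACUUM cannot run inside a transaction, so commit anything pending first.
    """
    conn.commit()
    conn.execute("VACUUM")


def main(argv=None):
    parser = argparse.ArgumentParser(description="Flickr metadata tools (sketch)")
    parser.add_argument("--db", default="flickr_metadata.db")
    parser.add_argument("--export", nargs="?", const="flickr_metadata.csv",
                        metavar="CSV_FILE", help="export photo data to CSV")
    parser.add_argument("--dedup", action="store_true",
                        help="remove duplicate tag rows")
    parser.add_argument("--compactdb", action="store_true",
                        help="shrink the database file")
    args = parser.parse_args(argv)

    conn = sqlite3.connect(args.db)
    try:
        if args.dedup:
            dedup(conn)
        if args.compactdb:
            compact_db(conn)
        if args.export:
            export_csv(conn, args.export)
    finally:
        conn.close()


if __name__ == "__main__":
    main()
```

For example, `python flickr_tools.py --dedup --compactdb --export photos.csv` runs all three tasks in that order, where `flickr_tools.py` is whatever you name the file. A bare `--export` writes to flickr_metadata.csv.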

What’s Coming:

The program isn’t perfect (but I ask you, who among us is?), and I already have a long list of things I’d like to improve. I wanted to get it out there since it works, even if it isn’t pretty. I’m sure the code could be cleaned up a lot. It currently stands at almost 400 lines, about 120 of which are comments (mostly so I can remember what the heck I was thinking when I wrote each bit). Some of these improvements and new features, which are also noted as comments in the script itself, include:

- Catch errors and exit more gracefully. Currently it just spews out errors and exits hard.

- Automatically restart a download after a Flickr error, up to a given number of times.

- Retrieve comments left on photos, security settings, and EXIF data.

- Move user-configurable variables to an .ini file using the ConfigParser module.

- Once the .ini file is done, it might be possible to package this as an .exe so you wouldn’t need to install Python or anything else.

- Use indexes in the database to speed up some of the query and export processes. I’ve played with this a bit, and using indexes sped up my export from 10 minutes to 10 seconds.

- Add a command line option for statistics (number of photos, unique tags, etc.).

- Add a command line option to remove the data about a single photo ID (photo, tags, etc.), a range of IDs, or a date range.

- Convert Unicode characters to ASCII or UTF-8 so that some characters in descriptions (and presumably comments) render correctly. Flickr uses Unicode, and so does the database, but the module that writes the CSV file doesn’t.
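Two of these wishlist items, the .ini file and automatic retries, fit together naturally. The sketch below is in Python 3, where ConfigParser became the `configparser` module. It reads retry settings from an .ini file and wraps a call that may fail in a retry loop. The section and key names and the `with_retries` helper are hypothetical. Catching `OSError` is also an assumption: the exception your Flickr library raises on an API error may be a different type, in which case you would add it to `retry_on`.

```python
import configparser
import time

# Hypothetical settings file contents; section and key names are illustrative.
EXAMPLE_INI = """\
[flickr]
api_key = YOUR_API_KEY
user_id = YOUR_USER_ID

[download]
db_file = flickr_metadata.db
max_retries = 5
retry_delay = 30
"""


def load_settings(text):
    """Parse .ini text. For a real file, use config.read("settings.ini")."""
    config = configparser.ConfigParser()
    config.read_string(text)
    return config


def with_retries(func, max_retries, delay, retry_on=(OSError,)):
    """Call func() and return its result, retrying after a listed error.

    Waits `delay` seconds between attempts and re-raises the last error
    once `max_retries` (assumed to be at least 1) attempts have failed.
    """
    for attempt in range(1, max_retries + 1):
        try:
            return func()
        except retry_on as err:
            if attempt == max_retries:
                raise
            print(f"Attempt {attempt} failed ({err}); retrying in {delay}s")
            time.sleep(delay)
```

In use, you might write `dl = load_settings(EXAMPLE_INI)["download"]` and then `with_retries(lambda: fetch_page(1), dl.getint("max_retries"), dl.getint("retry_delay"))`. Here `fetch_page` is a hypothetical function that makes one Flickr request.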

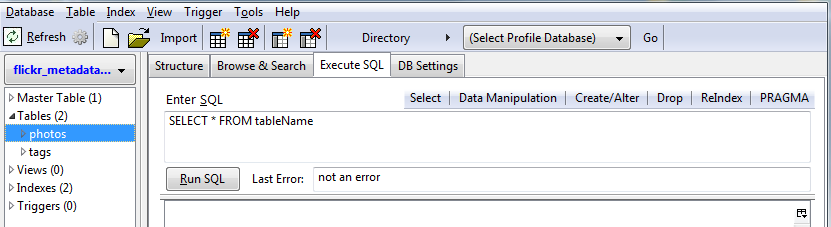

SQLite Manager:

One of the best things about this application is that it uses an SQLite database. This is the same kind of database used by applications like the Firefox browser (to store your bookmarks), Skype, and the iPhone. SQLite’s developers claim that it is “the most widely deployed SQL database engine in the world”. With the data in a database, you can use SQL to manipulate it in ways you never could in a spreadsheet. The best way to play with all your newly downloaded data is the SQLite Manager plugin for Firefox. It offers a graphical way to browse, query, and alter your database. It is easy to use, and you can use it to peek into all the various SQLite databases on your computer that you didn’t even realize were there. You might even learn a little SQL. Be sure to make a copy of your database before you go messing around.
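As a taste of what SQL makes possible, here is a sketch of the Wesley-and-Jillian-swimming search from earlier in this post. It runs through Python’s built-in sqlite3 module rather than SQLite Manager, though the same SELECT could be run there too. The sketch assumes a hypothetical `tags(photo_id, tag)` table with one row per photo/tag pair. It also creates the kind of indexes the wishlist mentions; the index names are made up.

```python
import sqlite3


def add_indexes(conn):
    """Index the tag table so tag lookups and joins avoid full table scans."""
    conn.execute("CREATE INDEX IF NOT EXISTS idx_tags_photo ON tags(photo_id)")
    conn.execute("CREATE INDEX IF NOT EXISTS idx_tags_tag ON tags(tag)")
    conn.commit()


def photos_with_all_tags(conn, tags):
    """Return sorted IDs of photos that carry every tag in a non-empty list."""
    # Only "?" placeholders are formatted into the SQL; the tag values
    # themselves are bound as parameters, so this is injection-safe.
    placeholders = ", ".join("?" for _ in tags)
    sql = f"""
        SELECT photo_id FROM tags
        WHERE tag IN ({placeholders})
        GROUP BY photo_id
        HAVING COUNT(DISTINCT tag) = ?
        ORDER BY photo_id"""
    return [row[0] for row in conn.execute(sql, (*tags, len(set(tags))))]
```

A photo qualifies only if it matches as many distinct tags as were asked for. Passing `["wesley", "jillian", "swimming", "amber"]` instead of the three-tag list therefore narrows the results to the photos that also include Amber.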

DOWNLOAD: Flickr MetaData Downloader (right click and save as)

I notice the wishlist includes grabbing user comments. Curious if you have made progress there?

I have approximately 16k product images on Flickr. The idea was to have our team review the images and key the appropriate internal product ID into the user comments field. The product IDs would then be used to map the images back to our ERP and e-commerce offering.

The intent was to use the Lightroom 3 publish function to grab the comments, and then use Metadata Explorer from pkzsoftware to output the metadata to a flat file.

But the .lrcat file structure has changed in LR3 to the point that Metadata Explorer cannot read it.

Unfortunately, my limited technical skill means I can’t contribute anything other than dollars to the cause. Let me know if you have an interest in any of the above. Either way, nice work…

Apologies if this is a semi-duplicate comment; Chrome choked when I hit the submit button…

Nice work on the metadata downloader – curious if there’s been any progress on grabbing user comments?

May have a proposition for you – let me know if you have an interest.

I was trying to download your ‘Flickr database downloader’, but the link doesn’t seem to be working anymore?!

@Connie: Sorry about the bad link. I think I have it working now.

A HUGE thank you for posting this; it was a huge lifesaver for me. I also wanted to get the photoset data for each photo, so I added it to your code.

You can find a write up of it at the link below. I’m happy to send you the file if you’d like.

http://drewtarvin.com/business.....-csv-file/

Looks like the API has changed. I got it to work by adding this line after the call to FlickrAPI:

flickr.authenticate_via_browser(perms='read')

and then commenting out the calls to get_token_part_N

It let me validate the key, and then crashed for other reasons that were my fault. After I fixed those, it seems to be working.